Chapter 12 Supervised Learning

12.1 Introduction

12.1.1 Motivation

In the last two chapters we introduced linear and logistic regression models. The primary motivation lied in the interpretation of the coefficients, seen as the effect of explanatory variables on the response adjusted for the effects of other, potentially correlated, variables. Under some assumptions this even allowed us – holy grail – to conclude about conditional independence. Often, these models were considered as interpretable data generative models, supposed to reflect the underlying process.

In this chapter, we will take a supervised machine learning angle. Here, we are interested in good predictions rather than identifying the most predictive features or drawing conclusions about statistical independence. On the one hand, the goal is less ambitious. On the other hand, focusing on prediction accuracy alone allows unleashing a wide variety of modeling techniques. The application area is immense and contains the most prominent successes of today’s artificial intelligence including medical diagnosis, image recognition, automatized translation, etc. We will learn how to evaluate the prediction performance on an independent test set and avoid problems related to poor generalization of a model to an independent test set. Additionally, we will introduce random forests as an alternative machine learning model for both regression and classification tasks.

12.1.2 Supervised learning vs. unsupervised learning

Machine learning is the study of computer algorithms that improve automatically through experience49. Most machine learning problems can be mainly categorized into two: supervised or unsupervised problems. In supervised learning, the goal is to build a powerful algorithm that takes feature values as input and returns a prediction for an outcome, even when we do not know the value for the actual outcome. For this, we train an algorithm using a data set for which we know the outcome, and then use this trained model to make predictions. In particular, for building the model, we associate each set of feature values to a certain outcome. In unsupervised learning, we do not associate feature values to an outcome. The situation is referred to as unsupervised because we lack an outcome variable that can supervise our analysis and model building. Instead, unsupervised learning algorithms identify patterns in the distribution of data. Typically, clustering problems and dimensionality reduction are attributed to unsupervised machine learning problems.

A powerful framework to study supervised and unsupervised learning is statistical learning, which express these problems in statistical terms. Supervised learning then maps to the task of fitting conditional distribution \(p(y|\mathbf x)\) where \(y\) is the outcome and \(\mathbf x\) are the features (or fitting aspects of the conditional distribution: the expectation, etc.). Linear regression is one method we already saw. Unsupervised learning in contrast refers to the task of fitting the distribution of the data \(p(\mathbf x)\), or some aspects of it (covariance: PCA, mixture components: clustering, etc.)

This chapter focuses on supervised learning. In fact, logistic and linear regression are two classical methods in supervised learning for solving classification and regression tasks.

12.1.3 Notation

In this chapter, we will use the classical machine learning nomenclature:

- \(y\) denotes the outcome (or response) that we want to predict

- \(x_1, \dots, x_p\) denote the features that we will use to predict the outcome.

12.1.4 Basic approach in supervised machine learning

In regression and classification tasks, we have a series of features and an unknown numeric or categorial outcome we want to predict:

| outcome | feature_1 | feature_2 | feature_3 | feature_4 | feature_5 |

|---|---|---|---|---|---|

| ? | \(x_1\) | \(x_2\) | \(x_3\) | \(x_4\) | \(x_5\) |

To build a model that provides a prediction for any set of observed values \(x_1, x_2, \dots x_5\), we collect data for which we know the outcome:

| outcome | feature_1 | feature_2 | feature_3 | feature_4 | feature_5 |

|---|---|---|---|---|---|

| \(y_{1}\) | \(x_{1,1}\) | \(x_{1,2}\) | \(x_{1,3}\) | \(x_{1,4}\) | \(x_{1,5}\) |

| \(y_{2}\) | \(x_{2,1}\) | \(x_{2,2}\) | \(x_{2,3}\) | \(x_{2,4}\) | \(x_{2,5}\) |

| \(y_{3}\) | \(x_{3,1}\) | \(x_{3,2}\) | \(x_{3,3}\) | \(x_{3,4}\) | \(x_{3,5}\) |

| \(y_{4}\) | \(x_{4,1}\) | \(x_{4,2}\) | \(x_{4,3}\) | \(x_{4,4}\) | \(x_{4,5}\) |

| \(y_{5}\) | \(x_{5,1}\) | \(x_{5,2}\) | \(x_{5,3}\) | \(x_{5,4}\) | \(x_{5,5}\) |

| \(y_{6}\) | \(x_{6,1}\) | \(x_{6,2}\) | \(x_{6,3}\) | \(x_{6,4}\) | \(x_{6,5}\) |

| \(y_{7}\) | \(x_{7,1}\) | \(x_{7,2}\) | \(x_{7,3}\) | \(x_{7,4}\) | \(x_{7,5}\) |

| \(y_{8}\) | \(x_{8,1}\) | \(x_{8,2}\) | \(x_{8,3}\) | \(x_{8,4}\) | \(x_{8,5}\) |

| \(y_{9}\) | \(x_{9,1}\) | \(x_{9,2}\) | \(x_{9,3}\) | \(x_{9,4}\) | \(x_{9,5}\) |

| \(y_{10}\) | \(x_{10,1}\) | \(x_{10,2}\) | \(x_{10,3}\) | \(x_{10,4}\) | \(x_{10,5}\) |

We use this data to train the model and then use the trained model to apply to our (new) data for which we do not know the outcome.

Now… how do we evaluate our model? And how do we make sure our model will perform well on our (new) data?

12.2 Over- and Under-fitting

Analyzing whether our built model is generalizing well and capturing the trend of the data is an essential aspect of supervised machine learning. Two situations should be avoided: (1) that the model does not capture the trends of the data resulting in a high measured error between actual and predicted outcomes (under-fitting) and (2) that the model does not generalize well but fits the data used for training the model too well (over-fitting).

12.2.1 Example: polynomial curve fitting

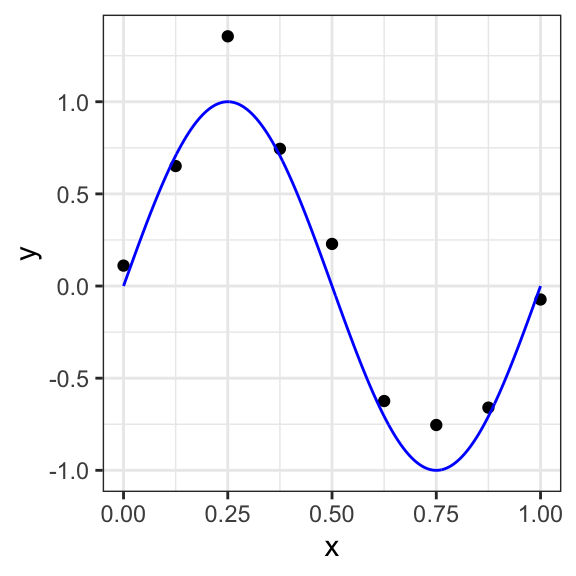

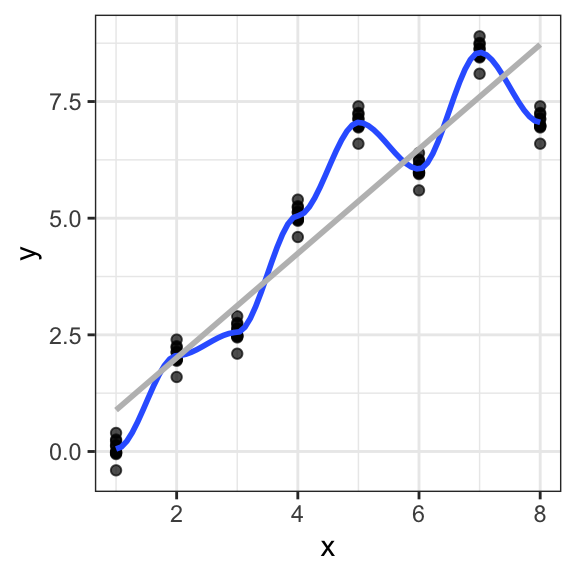

As an example, we consider a polynomial curve fitting as a regression task with a dataset of \(n =10\) points shown in Figure 12.1 , each comprising an observation of the input variable \(x\) along with the corresponding outcome variable \(y\). In this example, the data is generated from the function \[f(x)=\sin(2πx)\] (shown in blue) with added random noise from a normal distribution.

Figure 12.1: Data set of \(N =10\) points with a blue curve showing the function \(sin(2πx)\) used to generate the data

Assuming that we do not know the function plotted in the blue curve, the goal is to predict the value of \(y\) for some new arbitrary value of \(x\). For this, we want to exploit the given dataset of \(n\) data points in order to make predictions of the value \(y\) of the outcome variable for some new value \(x\), which involves implicitly trying to discover the underlying function \(f(x)=\sin(2πx)\). This is a difficult problem considering that we have to generalize from a finite dataset of only \(n\) points. Furthermore, the given data points include noise, and so for a given \(x\), there is a certain uncertainty regarding the correct value for \(y\).

We consider a simple approach based on curve fitting to make predictions for a given value of \(x\). More precisely, we fit the data using a polynomial function of the form:

\[ y(x, \boldsymbol\beta) = \beta_0 + \beta_1x + \beta_2x^2 + ... + \beta_mx^m = \sum_{j=0}^m \beta_j x^j \]

Here, \(m\) is the order of the polynomial function and \(x^j\) denotes \(x\) raised to the power of \(j\). The polynomial coefficients \(\beta_0,...,\beta_m\) are denoted by the vector \(\boldsymbol\beta\). Note that, the polynomial function \(y(x, \boldsymbol\beta)\) is a nonlinear function of \(x\) but it is a linear function of the coefficients \(\boldsymbol\beta\). Hence, a linear regression model (see Chapter 10) can be applied to find optimal values of \(\boldsymbol\beta\) assuming that we have selected a value for the order \(m\) of the polynomial fit.

As described in Chapter 10, an optimal linear model (with optimal \(\boldsymbol\beta\) values) can be found by minimizing an error function (sum of squared errors) that measures the misfit between the function \(y(x, \boldsymbol\beta)\) and the data points in the considered dataset.

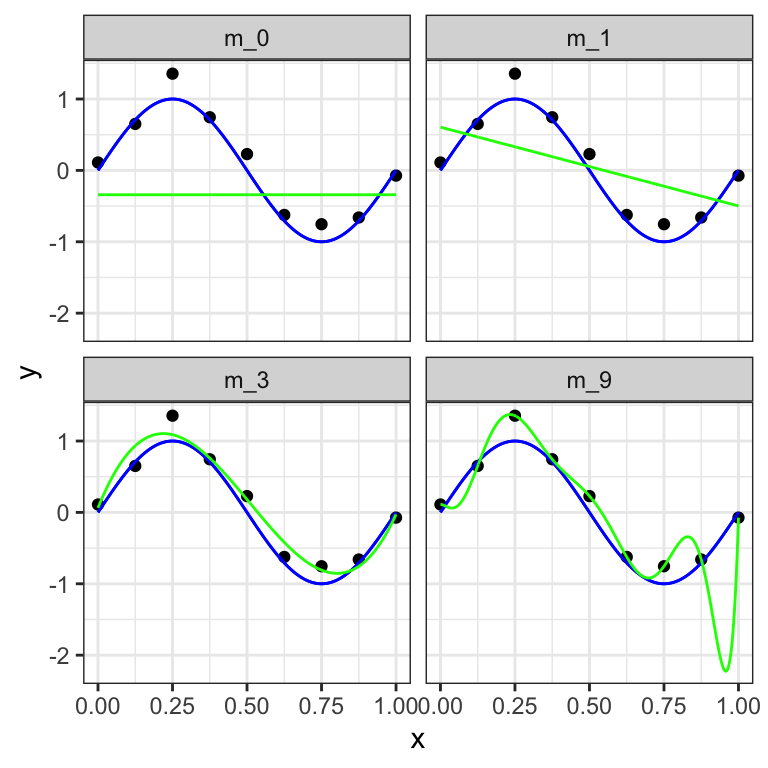

Selecting different values for the order \(m\) of the polynomial has a huge impact on the resulting model. In Figure 12.2, we show four examples of the results of fitting polynomials with different orders \(m = 0, 1, 3\) and \(9\) to our dataset of \(n=10\) points.

Figure 12.2: Plots of polynomials having various orders \(M\), shown as green curves, fitted to the considered data set with underlying expected value function in blue.

We can clearly see that the constant (\(m = 0\)) and first order (\(m = 1\)) polynomials give rather poor fits to the data and consequently rather poor representations of the function \(\sin(2πx)\). These two polynomial fits fail to capture the underlying trend of the data. A high error can be computed between the predicted and the actual data samples. These are examples of under-fitting.

The third order (\(m = 3\)) polynomial seems to give the best fit to the function \(\sin(2πx)\). By selecting \(m=3\) in the previous example, we observe an appropriate balance between capturing the trends in the data to achieve good generalization and making accurate predictions for outcome values.

When we go to a much higher order polynomial (\(m = 9\)), we obtain a polynomial that passes exactly through each data point. However, the fitted polynomial fails to give a good representation of the function \(\sin(2πx)\). The fitted curve considers each deviation in the data points (including noise). The model is too sensitive to noise and captures random patterns which are present only in the current dataset. Poor generalization to other datasets is expected. This latter behavior is known as over-fitting.

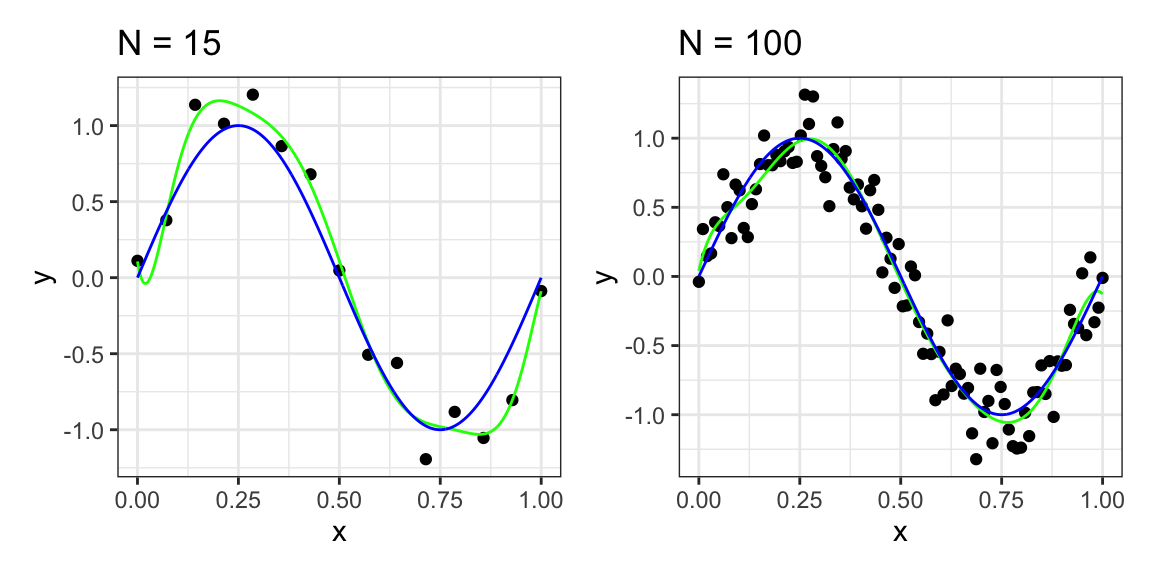

It is particularly interesting to examine the behavior of a given model as the size of the data set varies. We can see that, for a given model complexity, the over-fitting problem become less severe as the size of the data set increases. In our example, by considering not only \(10\), but \(n=15\) or \(n=100\) data points, we observe that increasing the size of the data set reduces the over-fitting problem (see Figure 12.3).

Figure 12.3: Polynomial curve fitting with \(n=15\) and \(n=100\) data points and polynomial order \(m=9\) in green and \(\sin(2πx)\) in blue.

This example, borrowed from the Christopher Bishop’s textbook, is very informative about the approach taken in and the issues faced by supervised learning.

First, supervised learning methods are more or less agnostic of the underlying processes. In our example, the underlying function is a sine. We may be looking at tides, an oscillating spring, etc. A physicist could come up with a model that leads to the right class of mathematical function. In supervised learning, we simply work with a generic class of flexible mathematical functions (here the polynomials, but there are many others), which can in principle approximate any function. One implication is that we will not aim at interpreting the coefficients. We would not pretend that the coefficient of say \(x^4\) has any physical meaning. A physicist would (and could!) in contrast interpret the amplitude or the period of the sine. In supervised learning, what guides our choice of the fit is data and only data. In particular, the complexity of the function we chose (here the order of polynomials) depends on how much data we have and not on theoretical considerations about the underlying process.

Second, the key issue is that it is easy with flexible mathematical functions to fit extremely well to a dataset. The challenge is not reducing the error on data at hand but the error on unseen data. Expected error on unseen data is called the generalization error. We will now investigate practical techniques to control the generalization error.

12.3 Splitting the dataset for performance assessment

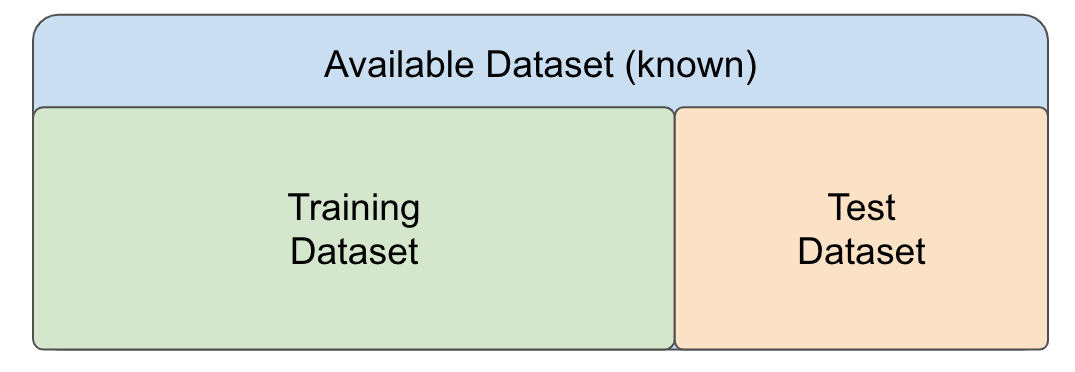

As previously stated, a supervised learning task starts with an available dataset that we use to build a model so that this model will eventually be used in completely independent datasets, as shown in Figure 12.4.

Figure 12.4: Illustration of an available set (in blue), whose outcome values are known and which is used to train a model, and an independent dataset (in grey), whose outcome values are unknown.

The first challenge arises when we do not know these independent datasets. However, in such cases, we still want to make sure that our model is not only working well when applied to the available dataset but can generalize well to independent unknown datasets. In particular, we want to make sure that we are not over-fitting to the dataset that the model was trained on.

How to minimize error on data we have never seen? We will make the following assumption:

We assume that the observations \(\mathbf x_i, i=1...n\) of our dataset and of unseen data are i.i.d., meaning they are independent obsvervations of the same population.

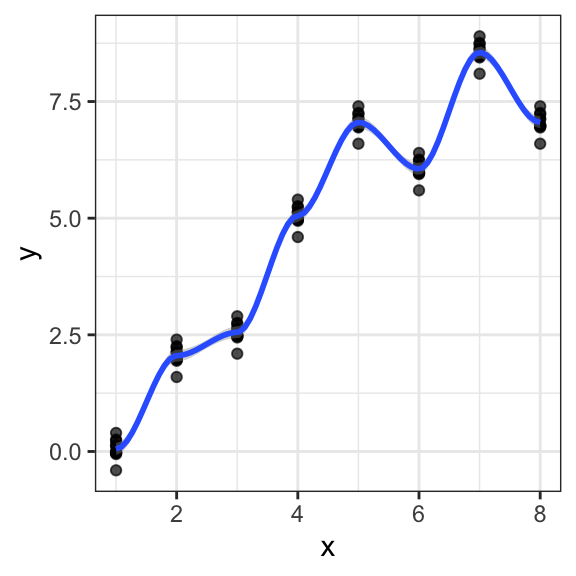

Under this assumption, one common strategy to evaluate the performance of the model on independent datasets is to select a subset of our dataset and pretend it is an independent dataset. As illustrated in Figure 12.5, we divide the available dataset into a training set and a test set. We will train our algorithm exclusively on the training set and use the test set only for evaluation purposes.

Figure 12.5: Separation of an available dataset into a training dataset (in green) and a test dataset (in orange) for building and evaluating a model.

Typically, we select a small piece of the dataset so that we have as much data as possible to train. However, we also want the test set to be large enough to obtain a stable estimate of the performance of the model on independent datasets. Common choices for the size of the train set are to use 10%-30% of the data for testing.

12.3.1 Over-fitting to the training dataset

Over-fitting can be detected when the measured error computed from a defined error function is notably larger for the test dataset than for the training dataset. Alternatively, one can use performance measurements such as precision or recall or visualize the performance of the model on the training vs. test dataset with ROC or precision-recall curves. Notable differences with the measurements or the plotted curves are signs of over-fitting.

In the previous example of the polynomial curve fitting, we can split the data consisting of \(n=10\) data points into train and test datasets and compare the computed error for different polynomial orders \(n\). For \(m=9\), the computed root-mean-squared error for the test dataset notably increases while the error for the training dataset decreases.

12.3.2 Cross-validation

Cross-validation is often used as a strategy to assess the performance of a machine learning model that can help to prevent over-fitting.

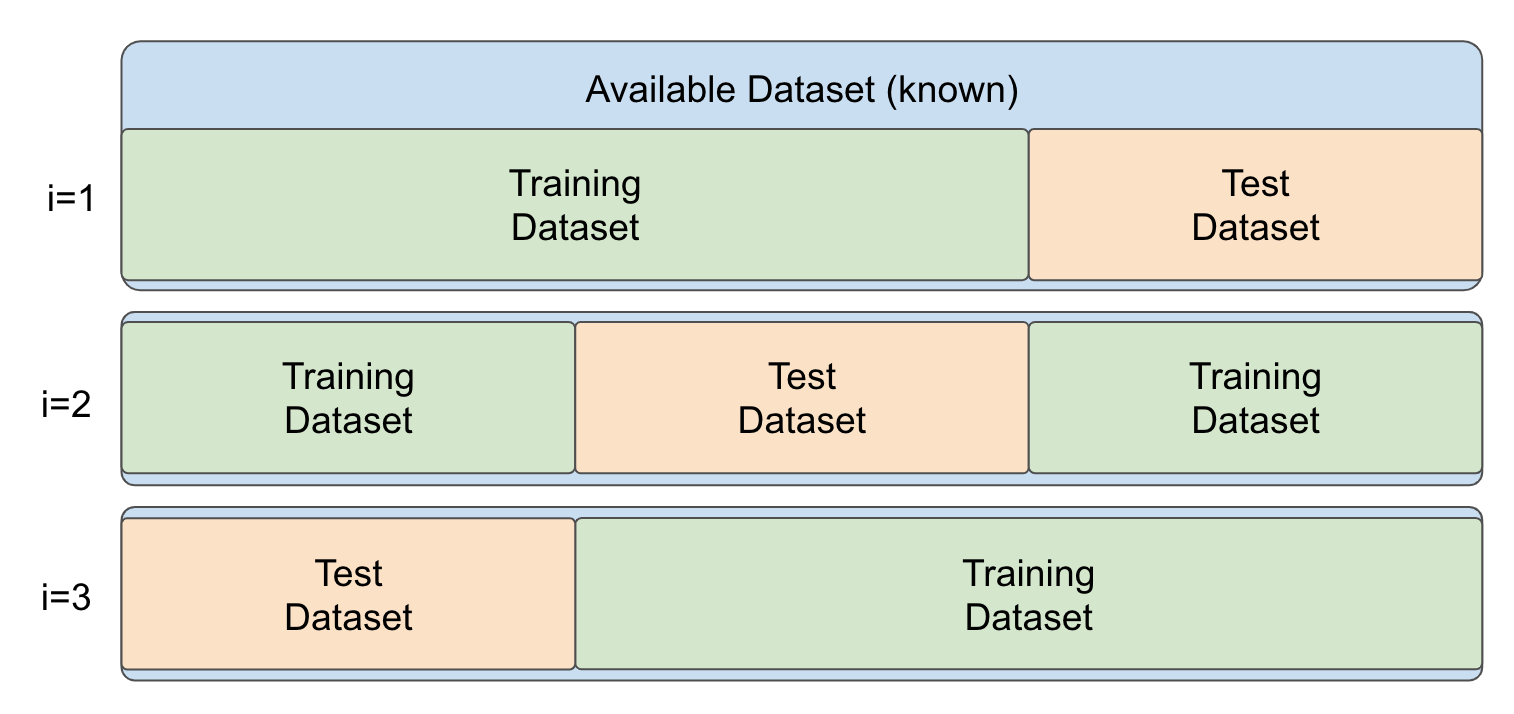

In cross-validation the data is randomly split into a number k of folds (e.g. 3, 5 or 10), as illustrated in Figure 12.6.

Figure 12.6: Schematic Illustration of cross-validation with \(k=3\) folds.

Then, a model is repeatedly trained on \(k-1\) folds and evaluated on the fold not used for training. In this manner, we train \(k\) models with a different training dataset on each fold \(i\) with \(i\in\{1,...,k\}\). After the \(k\) models have been trained and evaluated on the respective left-out folds, evaluation metrics can be summarized into a combined evaluation metric.

Now, how do we pick \(k\) for the number of folds? Large values of \(k\) are preferable because the training data better imitates the original dataset. However, larger values of \(k\) will have much slower computation time: for example, 100-fold cross-validation will be 10 times slower than 10-fold cross-validation. For this reason, the choices of \(k=5\) and \(k=10\) are usually considered in practice.

12.3.2.1 Pitfalls of cross-validation

For considering cross-validation one fundamental assumption has to be met, namely that the training samples and the test samples are independently and identically distributed (i.i.d.). As a trivial example, we would consider a non identical distribution if our training set consisted of red apples and green pears, but our test set also contained green apples. The issue of having non independent distributions may arise if we have data coming in clusters that we are not aware of (e.g: repeated measures, people from same families, homologous genes).

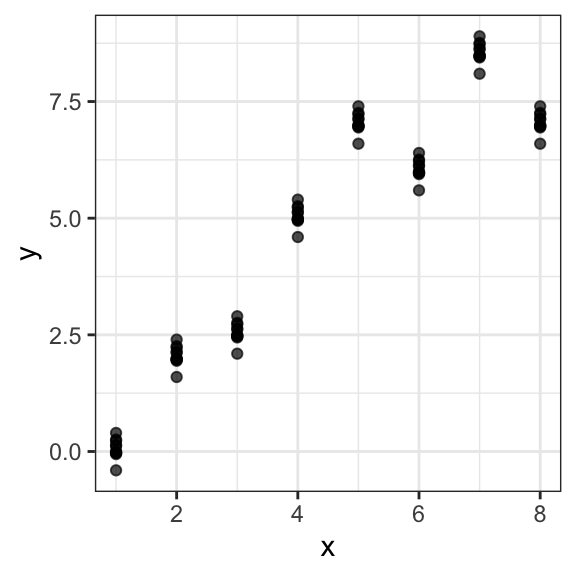

As an example of this issue, we consider a simulated dataset simulated_dt consisting of a feature variable x and an outcome variable y:

## x y

## 1: 1 0.00000000

## 2: 1 -0.03377742

## 3: 1 0.25579509

## 4: 1 0.22420401

## 5: 1 0.11684048

## 6: 1 -0.05580587

## 7: 1 0.39764426

## 8: 1 0.13625628

## 9: 1 -0.02500456

## 10: 1 -0.02142251We observe that the dataset contains repeated measures and that the between replicate simulated trend is x=y

Performing cross-validation at the level of individual data points will favor models that learn the clusters:

We need to perform cross-validation at the cluster level to learn the trend across clusters. However, this is difficult without application knowledge. Visualization techniques can help. Application areas may have their own strategies. In genetics, models are required to be replicated on independent populations (genetic associations on say Swedes must be replicated among say Germans). The issue also arises with temporal data. Cross-validation cannot be done by taking random subsets of past data points. For temporal data, it is common practice (and common sense) to test models my mimicking predictions of future time points. See a broader discussion of this issue by Roberts et al. (2017).

12.3.2.2 Cross-validation in R

In R, one approach to implement a cross validated model is using the caret package. The caret package consolidates different machine learning algorithms and tools from different authors into one library. It conveniently provides a function that performs cross-validation.

As an example, we implement a logistic regression model to solve the binary classification task from Chapter 11, which consists in predicting the gender of a person based on height values of him/herself and his/her parents. First, we load the heights dataset:

heights_dt <- fread("extdata/height.csv") %>% na.omit() %>%

.[, sex:=as.factor(toupper(sex))]

heights_dtThen, we define the validation specification with the help of the function trainControl(). Here, we set the method as cv for cross-validation with k=5 folds as the number argument. Additionally, we define twoClassSummary as the summary function for computing the sensitivity, specificity and the area under the ROC curve for performance measurement in binary classification tasks.

# install.packages("caret")

library(caret)

# generate control structure

k <- 5 # number of folds

fitControl <- trainControl(method = "cv", # cv for cross-validation

number = k,

classProbs=TRUE, # compute class probabilities

summaryFunction = twoClassSummary

)Next, we can train the model with the train() function. Here, we set the previously defined fitControl as the training control for implementing cross-validation:

# run CV

lr_fit <- train(## formula and dataset definition

sex~.,

data = heights_dt,

## model specification

method = "glm",

family = "binomial",

## validation specification

trControl = fitControl,

## Specify which metric to optimize

metric = "ROC",

)

lr_fit## Generalized Linear Model

##

## 253 samples

## 3 predictor

## 2 classes: 'F', 'M'

##

## No pre-processing

## Resampling: Cross-Validated (5 fold)

## Summary of sample sizes: 203, 202, 202, 203, 202

## Resampling results:

##

## ROC Sens Spec

## 0.9811125 0.911 0.9310541As we can observe, the lr_fit object returns the average values for the computed values of sensitivity, specificity and the area under the ROC curve of the k=5 trained models. The performance measurements for each model can be obtained as follows:

## ROC Sens Spec Resample

## 1 0.9967949 1.0000000 0.9615385 Fold1

## 2 0.9830769 0.9200000 0.9230769 Fold2

## 3 0.9953704 0.9583333 0.9629630 Fold3

## 4 0.9887821 0.9166667 0.9615385 Fold4

## 5 0.9415385 0.7600000 0.8461538 Fold5Additionally, the final model can be obtained by accessing the attribute finalModel from the lr_fit object:

##

## Call: NULL

##

## Coefficients:

## (Intercept) height mother father

## -37.4913 0.7307 -0.2899 -0.2360

##

## Degrees of Freedom: 252 Total (i.e. Null); 249 Residual

## Null Deviance: 350.4

## Residual Deviance: 83.05 AIC: 91.0512.4 Random Forests as alternative models

There is a plethora of supervised learning algorithms out there. Providing a reasonable survey of them is out of the scope of this module. We therefore introduce here a single supervised learning method beyond linear and logistic regression which is working well in many situations: Random forests. Just as linear and logistic regression should be the first baseline models to try, random forests can be seen as the first non-interpretable supervised learning model to give a shoot for. From this basis, many other options can be considered, often depending on the application areas.

Random forests can be applied to both regression and classification tasks. In this section, we introduce random forests as a further type of model for solving both tasks, as these are easy to understand and achieve state-of-the-art performance in many prediction tasks.

Random forests are based on decision trees. More precisely, they are an instance of tree-based ensemble learning, which is a strategy robust to over-fitting and allows fitting very flexible functions. Each random forest is an ensemble of several decision trees, which are machine learning models for both classification and regression tasks and will be introduced in the following section.

12.4.1 The basics of decision trees

A decision tree is a method that involves partitioning or segmenting the training data set into a number of simple regions. Typically, once the input space of the training dataset has been segmented, we can make a prediction for a new input sample by using the mean or the mode of the training observations in the region to which the new input sample is assigned to.

12.4.1.1 Decision trees for regression tasks

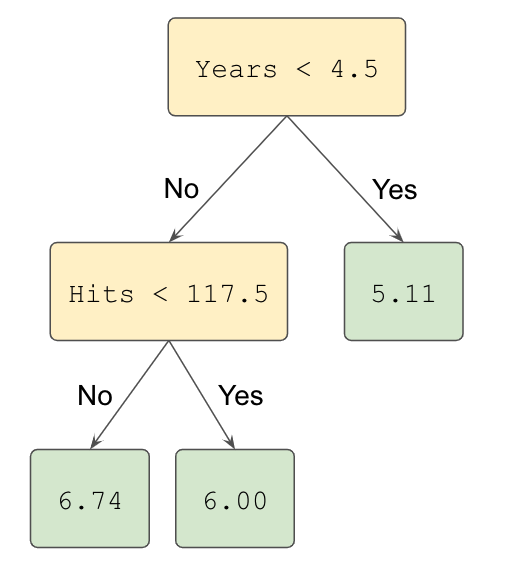

As an example for a decision tree model for a regression task, we consider the Hitters dataset 50 to predict a baseball player’s (log-transformed) Salary based on the feature Years (the number of years that he has played in the major leagues) and on the feature Hits (the number of hits that he made in the previous year). A regression tree for this dataset is shown in Figure 12.7 The constructed tree consist of 3 splitting rules. First, observations having Years<4.5 get passed to the right branch and observations having Years>=4.5 to the left branch. The predicted log-transformed salary for these players in the right is given by the mean log-transformed salary of all training samples in this node, which is in this case 5.11. Players assigned to the left branch are further subdivided by the feature Hits.

Figure 12.7: Illustration of a decision tree for predicting the logarithmic salary of a baseball player based on the features Years and Hits.

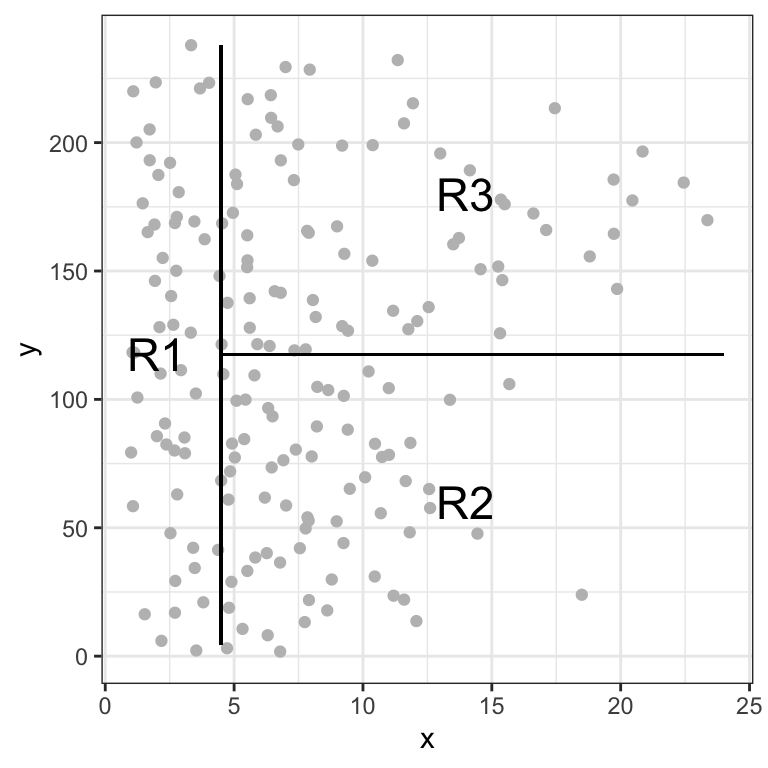

After the training is finalized, the tree regions the players into three regions: players who have played for four or fewer years, players who have played for five or more years and who made fewer than 118 hits last year, and players who have played for five or more years and who made at least 118 hits last year. A graphical representation of this resulting segmentation can be observed in Figure 12.8.

Figure 12.8: Illustration of the resulting three regions from the trained decision tree based on Hits and Years.

Formally, the three resulting regions can be written as:

\[ \begin{align} R_1 &=\{X | \text{Years}<4.5\} \\ R_2 &=\{X | \text{Years}>=4.5,\text{Hits}<117.5\}\\ R_3 &=\{X | \text{Years}>=4.5, \text{Hits}>=117.5\} \end{align} \]

Here, \(X\) is the dataset consisting of all training samples and the regions \(R_1\), \(R_2\) and \(R_3\) are the leaf nodes of the tree. All other nodes are denoted as internal nodes of the constructed decision tree.

Decision trees are easy to interpret. In our example, we can interpret the tree as follows:

Yearsis the most important feature for predicting the log-transformed salary of a player. Players with less experience earn lower salaries than more experienced players.If a player is more experienced, the number of hits that he made in the previous year is important for determining his salary.

Now… why and how did we choose the feature Years and the threshold of 4.5 for the top split? Overall, the goal of a decision tree for regression tasks is to find the optional regions \(R_1, R_2, ..., R_J\) that minimize the residual sum of squares (RSS) given by:

\[ \sum_{j=1}^J \sum_{i\in R_j} (y_i - \hat{y}_{R_j})^2, \]

where \(\hat{y}_{R_j}\) is the prediction for all samples in region \(R_j\), which is computed from the mean of the outcome value for the training samples in region \(R_j\)51.

Unfortunately, it is computationally unfeasible to consider every possible partition of the training dataset. This is why decision trees follow a top-down greedy approach to build the tree. The approach is top-down because it begins at the top of the tree with the complete dataset and then successively splits the dataset. Here, each split results into two new branches further down on the tree. Note that the approach is greedy because at each step of the process, the best split is made at that particular step.

In order to perform recursive binary splitting, we first select the feature \(X_j\) and a threshold \(s\) such that splitting the samples into the regions \(R_1(j,s) = {X|X_j < s}\) and \(R_2(j,s) = {X|X_j ≥ s}\) results into the greatest possible reduction in RSS. For this, we consider all possible features \(X_1,...,X_p\), and all possible values threshold values \(s\) for each feature. In greater detail, for any \(j\) and \(s\), we want to minimize the equation:

\[ \sum_{i\in R_1(j,s)} (y_i - \hat{y}_{R_{1}})^2 + \sum_{i\in R_2(j,s)} (y_i - \hat{y}_{R_{2}})^2, \] Note that finding optimal values for thresholds and features can be achieved quickly with the current advances in computer science assuming that the number of features is not too large.

The iterative process of finding features and thresholds that define splitting rules continues until a stopping criterion is met. This prevents to consider every possible partition of the training dataset. Stopping criteria include setting a minimum number of samples in a region or defining a maximum number of resulting regions.

Once the regions \(R_1,...,R_J\) have been created, we can predict the outcome of any given sample (from the test set) by assigning this sample to a region and returning the mean of the training observations in the region to which that (test) sample belongs.

12.4.1.2 Decision trees for classification tasks

As stated before, decision trees can be applied for both regression and classification tasks. The construction process of classification decision trees is very similar to the one that we previously described for regression tasks. One difference is that for a regression tree, the predicted outcome for an observation is given by the mean response of the training observations that belong to the same leaf node. In contrast, for a classification tree, we predict that each observation belongs to the most commonly occurring class of training observations in the region to which it belongs.

Additionally, in contrast to regression scenarios, RSS cannot be used as a criterion for making the tree splits for classification tasks. Typically, cross-entropy is used to fit the model (See Section 11.2).

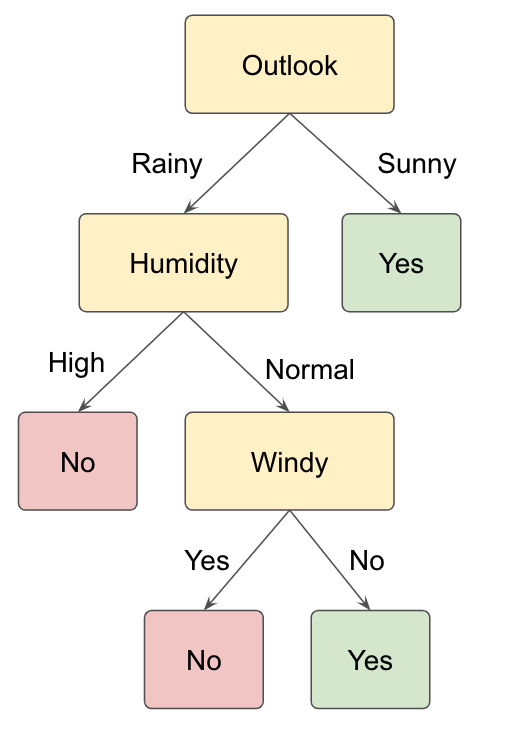

As an example we consider the binary classification task of deciding whether a person should play tennis on a given day or not. We consider the simulated data for training a model. The data contains four feature variables ("Outlook", "Temperature", "Humidity" and "Windy") and the outcome variable "Play.Tennis".

## Day Outlook Temperature Humidity Windy Play.Tennis

## 1: 1 Sunny 15.5 Normal Yes No

## 2: 2 Rainy 24.5 High Yes No

## 3: 3 Rainy 24.0 Normal No Yes

## 4: 4 Rainy 15.0 Normal Yes Yes

## 5: 5 Rainy 27.0 Normal Yes Yes

## 6: 6 Rainy 27.5 Normal No Yes

## 7: 7 Sunny 16.0 High No Yes

## 8: 8 Sunny 29.5 Normal Yes Yes

## 9: 9 Sunny 17.0 High No Yes

## 10: 10 Rainy 20.5 Normal Yes YesFigure 12.9 shows an example of a decision tree for solving the described classification task.The initial splitting rule tests whether the value of the Outlook feature of each sample is either sunny or rainy. Samples with the feature value being sunny are passed to the right node. If the outlook is rainy for a subset of the initial samples in the dataset, these samples are passed to the left node. Here, a second feature test is performed. This test involves checking the humidity feature for all samples. If the humidity value is normal, a third splitting rule is defined for checking whether the day is windy or not. In total, four regions result from the training process of the decision tree.

Figure 12.9: Example of a decision tree for the binary classification task of deciding whether to play tennis or not based on the features Outlook, Humidity and Windy.

Note that decision trees for classification tasks can be constructed with both numerical and categorical features, even though our tennis example only contains categorical data. Feature tests for numerical features can be formulated with an (un)equality for a certain feature, as described before for regression trees. For instance, a node in a decision tree could test whether the temperature feature is higher than 20 degrees Celsius (Temperature>20).

12.4.2 Random Forests for classification and regression tasks

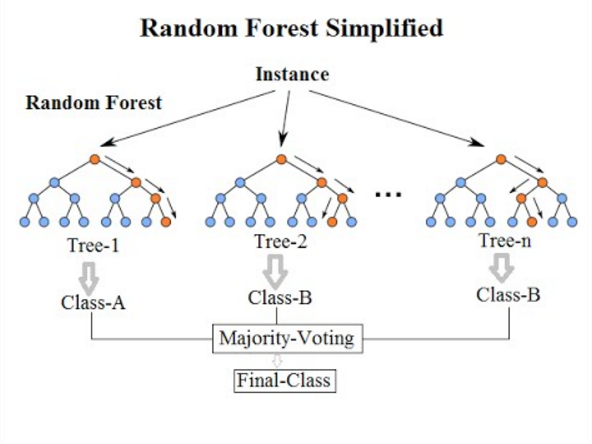

For constructing a random forest, the first step is called aggregation (or bagging), which involves generating many predictors, each using regression or classification trees. To ensure that the individual trees are not the same, we use bootstrap to induce randomness. These two features combined explain the name: the bootstrap makes the individual trees randomly different, and the combination of trees is the forest. In a random forest for classification tasks, the predicted output is the majority vote of the contained decision trees. In contrast, the predicted value of a random forest regressor is the mean of the predictions from each decision tree in the random forest.

Figure 12.10: Illustration of a random forest for classification tasks. Source: By Venkata Jagannath - https://community.tibco.com/wiki/random-forest-template-tibco-spotfirer-wiki-page, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=68995764

The specific steps for building a random forest can be formulated as follows:

Build \(B\) decision trees using the training set. We refer to the fitted models as \(T_1,T_2,...,T_B\).

For every observation in the test set, form a prediction \(\hat{y}_j\) using tree \(T_j\)

For continuous outcomes, form a final prediction with the average \(\hat{y} =\frac{1}{B}\sum_{j=1}^B \hat{y}_j\). For categorical outcomes, predict \(\hat{y}\) with majority vote (most frequent class among \(\hat{y}_1, ..., \hat{y}_B\)).

Now… how do we assure that the decision trees are different in the random forest, even if they are trained on the same training dataset? For this, we use randomness in two ways. Let \(N\) be the number of observations in the training set. To create the trees \(T_1,T_2,...,T_B\) from the training set we do the following with the feature and sample selection:

Create a bootstrap training set by sampling \(N\) observations from the training set with replacement. In this manner, each tree is trained on a different dataset. A technique also called bagging (from bootstrap aggregating).

Randomly select a subset of the features to be included in the building of each tree so that not all features are considered for each tree. A different random subset is selected for each tree. This reduces correlation between trees in the forest and prevents over-fitting.

12.4.3 Random Forests in R

We use function randomForest from the package randomForest for training random forests in R.

As an example, we train a random forest classifier for predicting the gender of each person in the heights_dt data table given the height values of the person and the person’s parents as before. We define the values for a few hyper parameters such as the number of trees in the random forest (ntree), the minimum number of samples in a leaf node (nodesize) and the maximum number of leaf nodes in a tree (maxnodes).

rf_classifier <- randomForest(## Define formula and data

sex~.,

data=heights_dt,

## Hyper parameters

ntree=100, # Define number of trees

nodesize = 5, # Minimum size of leaf nodes

maxnodes = 30 # Maximum number of leaf nodes

)

rf_classifier##

## Call:

## randomForest(formula = sex ~ ., data = heights_dt, ntree = 100, nodesize = 5, maxnodes = 30)

## Type of random forest: classification

## Number of trees: 100

## No. of variables tried at each split: 1

##

## OOB estimate of error rate: 10.28%

## Confusion matrix:

## F M class.error

## F 109 13 0.10655738

## M 13 118 0.09923664We remark that the number of threes (ntree), the minimum size of leaf nodes (nodesize) and the maximum amount of leaf nodes (maxnodes) in each tree are hyper parameters of random forests. These should be optimized (or tuned) for each application to achieve a better performance of the models. Two popular approaches are grid search and random reach. Hyper-parameter tuning is out the scope of this lecture. We refer the interested reader to the practical methodology chapter of the Deep learning book (Goodfellow, Bengio, and Courville 2016).

For predicting the gender of an input sample using the trained model stored in the variable rf_classifier, we can use the function predict as follows:

## height sex mother father sex_predicted

## 1: 197 M 175 191 M

## 2: 196 M 163 192 M

## 3: 195 M 171 185 M

## 4: 195 M 168 191 M

## 5: 194 M 164 189 M

## ---

## 249: 152 F 150 165 F

## 250: 172 F 165 188 F

## 251: 154 F 155 165 F

## 252: 178 M 169 174 M

## 253: 175 F 171 189 F12.5 Conclusion

This chapter introduced concepts from supervised machine learning for prediction tasks. Here, the main focus is to build and evaluate models that identify trends in the training dataset but are still able to generalize to (new) independent datasets. We described cross-validation as a strategy to prevent over-fitting.

Additionally, we introduced random forests as alternative machine learning models to linear and logistic regression for regression and classification tasks, since they are easy to understand and interpret and still show good performance in many application tasks. However, there are many more algorithms for solving regression and/or classification tasks in supervised learning. A few of the most popular methods include support vector machines (SVMs), (Deep) Neural Networks, k-Nearest Neighbors and Naive Bayes classifiers.

12.6 Resources

Christopher M. Bishop. 2006. Pattern Recognition and Machine Learning (Information Science and Statistics). Springer-Verlag, Berlin, Heidelberg.

Rafael A. Irizarry. 2019. Introduction to Data Science. Data Analysis and Prediction Algorithms with R .

Gareth James, Daniela Witten, Trevor Hastie & Robert Tibshirani. 2013. An Introduction to Statistical Learning with Applications in R.

The caret package manual: https://topepo.github.io/caret/

The Datacamp machine learning courses: https://www.datacamp.com/courses/machine-learning-toolbox

References

Show that sample mean minimizes the least square errors to a set of observations.↩︎